
A Tutorial on Information Representation

Integers, Floating-point Numbers, and Characters

Number Systems

Human beings use decimal (base 10) and duodecimal (base 12) number systems for counting and measurements (probably because we have 10 fingers and two big toes). Computers use the binary (base 2) number system, as they are made from binary digital components (known as transistors) operating in two states - on and off. In computing, we also use hexadecimal (base 16) or octal (base 8) number systems, as a compact form for representing binary numbers.

Decimal (Base 10) Number System

The decimal number system has ten symbols: 0, 1, 2, 3, 4, 5, 6, 7, 8, and 9, called digits. It uses positional notation. That is, the least-significant digit (right-most digit) is of the order of 10^0 (units or ones), the second right-most digit is of the order of 10^1 (tens), the third right-most digit is of the order of 10^2 (hundreds), and so on, where ^ denotes exponent. For example,

735 = 700 + 30 + 5 = 7×10^2 + 3×10^1 + 5×10^0

We shall denote a decimal number with an optional suffix D if ambiguity arises.

Binary (Base 2) Number System

The binary number system has two symbols: 0 and 1, called bits. It is also a positional notation, for example,

10110B = 10000B + 0000B + 100B + 10B + 0B = 1×2^4 + 0×2^3 + 1×2^2 + 1×2^1 + 0×2^0

We shall denote a binary number with a suffix B. Some programming languages denote binary numbers with prefix 0b or 0B (e.g., 0b1001000), or prefix b with the bits quoted (e.g., b'10001111').

A binary digit is called a bit. Eight bits is called a byte (why 8-bit unit? Probably because 8=2^3).

Hexadecimal (Base 16) Number System

The hexadecimal number system uses 16 symbols: 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, and F, called hex digits. It is a positional notation, for example,

A3EH = A00H + 30H + EH = 10×16^2 + 3×16^1 + 14×16^0

We shall denote a hexadecimal number (in short, hex) with a suffix H. Some programming languages denote hex numbers with prefix 0x or 0X (e.g., 0x1A3C5F), or prefix x with hex digits quoted (e.g., x'C3A4D98B').

Each hexadecimal digit is also called a hex digit. Most programming languages accept lowercase 'a' to 'f' as well as uppercase 'A' to 'F'.

Computers use the binary system in their internal operations, as they are built from binary digital electronic components with 2 states - on and off. However, writing or reading a long sequence of binary bits is cumbersome and error-prone (try to read this binary string: 1011 0011 0100 0011 0001 1101 0001 1000B, which is the same as hexadecimal B343 1D18H). The hexadecimal system is used as a compact form or shorthand for binary bits. Each hex digit is equivalent to 4 binary bits, i.e., shorthand for 4 bits, as follows:

| Hexadecimal | Binary | Decimal |
|---|---|---|
| 0 | 0000 | 0 |
| 1 | 0001 | 1 |
| 2 | 0010 | 2 |
| 3 | 0011 | 3 |
| 4 | 0100 | 4 |
| 5 | 0101 | 5 |
| 6 | 0110 | 6 |
| 7 | 0111 | 7 |
| 8 | 1000 | 8 |
| 9 | 1001 | 9 |
| A | 1010 | 10 |
| B | 1011 | 11 |
| C | 1100 | 12 |
| D | 1101 | 13 |
| E | 1110 | 14 |
| F | 1111 | 15 |

Conversion from Hexadecimal to Binary

Replace each hex digit by the 4 equivalent bits (as listed in the above table), for examples,

A3C5H = 1010 0011 1100 0101B

102AH = 0001 0000 0010 1010B

Conversion from Binary to Hexadecimal

Starting from the right-most bit (least-significant bit), replace each group of 4 bits by the equivalent hex digit (pad the left-most bits with zero if necessary), for examples,

1001001010B = 0010 0100 1010B = 24AH

10001011001011B = 0010 0010 1100 1011B = 22CBH
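The two digit-grouping rules above can be sketched in Java. This is a minimal illustration, not a library API: the class and method names (`HexBinaryDemo`, `hexToBinary`, `binaryToHex`) are made up for this example, and the input is assumed to contain only valid digits.

```java
class HexBinaryDemo {
    // Expand each hex digit into its 4-bit group (assumes valid hex digits).
    static String hexToBinary(String hex) {
        StringBuilder sb = new StringBuilder();
        for (char c : hex.toCharArray()) {
            String bits = Integer.toBinaryString(Character.digit(c, 16));
            for (int i = bits.length(); i < 4; i++) sb.append('0'); // left-pad to 4 bits
            sb.append(bits);
        }
        return sb.toString();
    }

    // Group bits in fours from the right, padding the left-most group with zeros.
    static String binaryToHex(String bits) {
        int pad = (4 - bits.length() % 4) % 4;
        StringBuilder padded = new StringBuilder();
        for (int i = 0; i < pad; i++) padded.append('0');
        padded.append(bits);
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < padded.length(); i += 4) {
            int v = Integer.parseInt(padded.substring(i, i + 4), 2);
            sb.append(Character.toUpperCase(Character.forDigit(v, 16)));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(hexToBinary("A3C5"));       // 1010001111000101
        System.out.println(binaryToHex("1001001010")); // 24A
    }
}
```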

It is important to note that a hexadecimal number provides a compact form or shorthand for representing binary bits.

Conversion from Base r to Decimal (Base 10)

Given an n-digit base r number: d_(n-1) d_(n-2) d_(n-3) ... d_2 d_1 d_0 (base r), the decimal equivalent is given by:

d_(n-1)×r^(n-1) + d_(n-2)×r^(n-2) + ... + d_1×r^1 + d_0×r^0

For examples,

A1C2H = 10×16^3 + 1×16^2 + 12×16^1 + 2 = 41410 (base 10)

10110B = 1×2^4 + 1×2^2 + 1×2^1 = 22 (base 10)
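The positional sum above can be evaluated left to right without computing powers explicitly (Horner's rule, which is algebraically the same sum). The sketch below assumes digits 0-9 and A-Z and a radix between 2 and 36; `ToDecimalDemo` and `toDecimal` are illustrative names.

```java
class ToDecimalDemo {
    // Evaluate d_(n-1)*r^(n-1) + ... + d_0*r^0 using Horner's rule.
    static long toDecimal(String digits, int radix) {
        long value = 0;
        for (char c : digits.toCharArray()) {
            int d = Character.digit(c, radix); // -1 if c is not a digit in this radix
            if (d < 0) throw new IllegalArgumentException("bad digit: " + c);
            value = value * radix + d;
        }
        return value;
    }

    public static void main(String[] args) {
        System.out.println(toDecimal("A1C2", 16)); // 41410
        System.out.println(toDecimal("10110", 2)); // 22
    }
}
```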

Conversion from Decimal (Base 10) to Base r

Use repeated division/remainder. For example,

To convert 261(base 10) to hexadecimal:

261/16 => quotient=16 remainder=5

16/16 => quotient=1 remainder=0

1/16 => quotient=0 remainder=1 (quotient=0 stop)

Hence, 261D = 105H (Collect the hex digits from the remainder in reverse order)

The above procedure is actually applicable to conversion between any 2 base systems. For example,

To convert 1023(base 4) to base 3:

1023(base 4)/3 => quotient=25D remainder=0

25D/3 => quotient=8D remainder=1

8D/3 => quotient=2D remainder=2

2D/3 => quotient=0 remainder=2 (quotient=0 stop)

Hence, 1023(base 4) = 2210(base 3)
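The repeated division/remainder procedure translates directly into a loop. This is a sketch for non-negative values and a radix between 2 and 36; `FromDecimalDemo` and `fromDecimal` are illustrative names.

```java
class FromDecimalDemo {
    // Repeatedly divide by the radix; the remainders, read in reverse, are the digits.
    static String fromDecimal(long value, int radix) {
        if (value == 0) return "0";
        StringBuilder sb = new StringBuilder();
        while (value > 0) {
            int remainder = (int) (value % radix);
            sb.append(Character.toUpperCase(Character.forDigit(remainder, radix)));
            value /= radix; // the quotient feeds the next step
        }
        return sb.reverse().toString(); // collect remainders in reverse order
    }

    public static void main(String[] args) {
        System.out.println(fromDecimal(261, 16)); // 105
        System.out.println(fromDecimal(75, 3));   // 2210 (75D is 1023 in base 4)
    }
}
```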

Conversion between Two Number Systems with Fractional Part

- Separate the integral and the fractional parts.

- For the integral part, divide by the target radix repeatedly, and collect the remainder in reverse order.

- For the fractional part, multiply the fractional part by the target radix repeatedly, and collect the integral part in the same order.

Example 1: Decimal to Binary

Convert 18.6875D to binary

Integral Part = 18D

18/2 => quotient=9 remainder=0

9/2 => quotient=4 remainder=1

4/2 => quotient=2 remainder=0

2/2 => quotient=1 remainder=0

1/2 => quotient=0 remainder=1 (quotient=0 stop)

Hence, 18D = 10010B

Fractional Part = .6875D

.6875*2=1.375 => whole number is 1

.375*2=0.75 => whole number is 0

.75*2=1.5 => whole number is 1

.5*2=1.0 => whole number is 1

Hence .6875D = .1011B

Combine, 18.6875D = 10010.1011B

Example 2: Decimal to Hexadecimal

Convert 18.6875D to hexadecimal

Integral Part = 18D

18/16 => quotient=1 remainder=2

1/16 => quotient=0 remainder=1 (quotient=0 stop)

Hence, 18D = 12H

Fractional Part = .6875D

.6875*16=11.0 => whole number is 11D (BH)

Hence .6875D = .BH

Combine, 18.6875D = 12.BH
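The repeated-multiplication step for the fractional part can be sketched as follows. The names `FractionDemo` and `fractionToBase` are illustrative, and the `maxDigits` cap is an added assumption because many fractions (e.g., 0.1 in binary) never terminate. The input is a Java `double`, which is itself a binary approximation, so only fractions such as 0.6875 that are exactly representable convert without rounding effects.

```java
class FractionDemo {
    // Multiply the fraction by the radix repeatedly; each whole part is the next digit.
    static String fractionToBase(double fraction, int radix, int maxDigits) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < maxDigits && fraction != 0.0; i++) {
            fraction *= radix;
            int whole = (int) fraction;   // integral part becomes the digit
            sb.append(Character.toUpperCase(Character.forDigit(whole, radix)));
            fraction -= whole;            // keep only the fractional part
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println("." + fractionToBase(0.6875, 2, 16));  // .1011
        System.out.println("." + fractionToBase(0.6875, 16, 16)); // .B
    }
}
```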

Exercises (Number Systems Conversion)

- Convert the following decimal numbers into binary and hexadecimal numbers:

  - 108

  - 4848

  - 9000

- Convert the following binary numbers into hexadecimal and decimal numbers:

  - 1000011000

  - 10000000

  - 101010101010

- Convert the following hexadecimal numbers into binary and decimal numbers:

  - ABCDE

  - 1234

  - 80F

- Convert the following decimal numbers into binary equivalent:

  - 19.25D

  - 123.456D

Answers: You could use the Windows' Calculator (calc.exe) to carry out number system conversion, by setting it to the Programmer or Scientific mode. (Run "calc" ⇒ Select "Settings" menu ⇒ Choose "Programmer" or "Scientific" mode.)

- 1101100B, 1001011110000B, 10001100101000B, 6CH, 12F0H, 2328H.

- 218H, 80H, AAAH, 536D, 128D, 2730D.

- 10101011110011011110B, 1001000110100B, 100000001111B, 703710D, 4660D, 2063D.

- ?? (You work it out!)

Computer Memory & Data Representation

A computer uses a fixed number of bits to represent a piece of data, which could be a number, a character, or others. An n-bit storage location can represent up to 2^n distinct entities. For example, a 3-bit memory location can hold one of these eight binary patterns: 000, 001, 010, 011, 100, 101, 110, or 111. Hence, it can represent at most 8 distinct entities. You could use them to represent numbers 0 to 7, numbers 8881 to 8888, characters 'A' to 'H', or up to 8 kinds of fruits like apple, orange, banana; or up to 8 kinds of animals like lion, tiger, etc.

Integers, for example, can be represented in 8-bit, 16-bit, 32-bit or 64-bit. You, as the programmer, choose an appropriate bit-length for your integers. Your choice will impose a constraint on the range of integers that can be represented. Besides the bit-length, an integer can be represented in various representation schemes, e.g., unsigned vs. signed integers. An 8-bit unsigned integer has a range of 0 to 255, while an 8-bit signed integer has a range of -128 to 127 - both representing 256 distinct numbers.

It is important to note that a computer memory location merely stores a binary pattern. It is entirely up to you, as the programmer, to decide on how these patterns are to be interpreted. For example, the 8-bit binary pattern "0100 0001B" can be interpreted as an unsigned integer 65, or an ASCII character 'A', or some secret information known only to you. In other words, you have to first decide how to represent a piece of data in a binary pattern before the binary patterns make sense. The interpretation of a binary pattern is called data representation or encoding. Furthermore, it is important that the data representation schemes are agreed upon by all the parties, i.e., industrial standards need to be formulated and strictly followed.
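To make the point about interpretation concrete, the sketch below reads the same 8-bit pattern in three ways. The class and method names are illustrative; `Byte.toUnsignedInt` is a standard JDK method (Java 8 or later). Java's `byte` is a signed 2's complement type, which is covered later in this tutorial.

```java
class InterpretDemo {
    static int asUnsigned(byte b) { return Byte.toUnsignedInt(b); } // 0 to 255
    static int asSigned(byte b)   { return b; }                     // -128 to 127
    static char asAscii(byte b)   { return (char) (b & 0x7F); }     // 7-bit ASCII code

    public static void main(String[] args) {
        byte pattern = 0b0100_0001;              // the pattern 0100 0001B
        System.out.println(asUnsigned(pattern)); // 65
        System.out.println(asAscii(pattern));    // A

        byte other = (byte) 0b1000_0001;         // the pattern 1000 0001B
        System.out.println(asUnsigned(other));   // 129
        System.out.println(asSigned(other));     // -127
    }
}
```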

Once you have decided on the data representation scheme, certain constraints, in particular, the precision and range, will be imposed. Hence, it is important to understand data representation to write correct and high-performance programs.

Rosetta Stone and the Decipherment of Egyptian Hieroglyphs

Egyptian hieroglyphs were used by the ancient Egyptians since 4000BC. Unfortunately, since 500AD, no one could any longer read the ancient Egyptian hieroglyphs, until the re-discovery of the Rosetta Stone in 1799 by Napoleon's troop (during Napoleon's Egyptian invasion) near the town of Rashid (Rosetta) in the Nile Delta.

The Rosetta Stone is inscribed with a decree in 196BC on behalf of King Ptolemy V. The decree appears in three scripts: the upper text is Ancient Egyptian hieroglyphs, the middle portion Demotic script, and the lowest Ancient Greek. Because it presents essentially the same text in all three scripts, and Ancient Greek could still be understood, it provided the key to the decipherment of the Egyptian hieroglyphs.

The moral of the story is that unless you know the encoding scheme, there is no way that you can decode the data.

Reference and images: Wikipedia.

Integer Representation

Integers are whole numbers or fixed-point numbers with the radix point fixed after the least-significant bit. They are in contrast to real numbers or floating-point numbers, where the position of the radix point varies. It is important to take note that integers and floating-point numbers are treated differently in computers. They have different representations and are processed differently (e.g., floating-point numbers are processed in a so-called floating-point processor). Floating-point numbers will be discussed later.

Computers use a fixed number of bits to represent an integer. The commonly-used bit-lengths for integers are 8-bit, 16-bit, 32-bit or 64-bit. Besides bit-lengths, there are two representation schemes for integers:

- Unsigned Integers: can represent zero and positive integers.

- Signed Integers: can represent zero, positive and negative integers. Three representation schemes had been proposed for signed integers:

  - Sign-Magnitude representation

  - 1's Complement representation

  - 2's Complement representation

You, as the programmer, need to decide on the bit-length and representation scheme for your integers, depending on your application's requirements. Suppose that you need a counter for counting a small quantity from 0 up to 200, you might choose the 8-bit unsigned integer scheme as there are no negative numbers involved.

n-bit Unsigned Integers

Unsigned integers can represent zero and positive integers, but not negative integers. The value of an unsigned integer is interpreted as "the magnitude of its underlying binary pattern".

Example 1: Suppose that n=8 and the binary pattern is 0100 0001B, the value of this unsigned integer is 1×2^0 + 1×2^6 = 65D.

Example 2: Suppose that n=16 and the binary pattern is 0001 0000 0000 1000B, the value of this unsigned integer is 1×2^3 + 1×2^12 = 4104D.

Example 3: Suppose that n=16 and the binary pattern is 0000 0000 0000 0000B, the value of this unsigned integer is 0.

An n-bit pattern can represent 2^n distinct integers. An n-bit unsigned integer can represent integers from 0 to (2^n)-1, as tabulated below:

| n | Minimum | Maximum |
|---|---|---|
| 8 | 0 | (2^8)-1 (=255) |
| 16 | 0 | (2^16)-1 (=65,535) |
| 32 | 0 | (2^32)-1 (=4,294,967,295) (9+ digits) |
| 64 | 0 | (2^64)-1 (=18,446,744,073,709,551,615) (19+ digits) |

Signed Integers

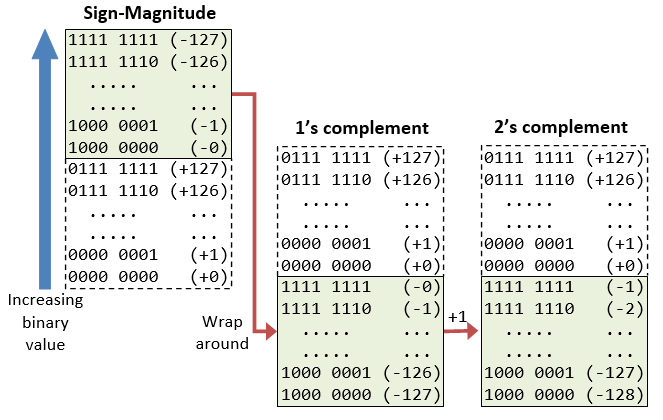

Signed integers can represent zero, positive integers, as well as negative integers. Three representation schemes are available for signed integers:

- Sign-Magnitude representation

- 1's Complement representation

- 2's Complement representation

In all the above three schemes, the most-significant bit (msb) is called the sign bit. The sign bit is used to represent the sign of the integer - with 0 for positive integers and 1 for negative integers. The magnitude of the integer, however, is interpreted differently in different schemes.

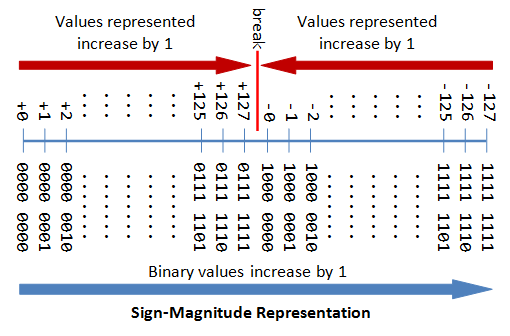

n-bit Sign Integers in Sign-Magnitude Representation

In sign-magnitude representation:

- The most-significant bit (msb) is the sign bit, with value of 0 representing positive integer and 1 representing negative integer.

- The remaining n-1 bits represent the magnitude (absolute value) of the integer. The absolute value of the integer is interpreted as "the magnitude of the (n-1)-bit binary pattern".

Example 1: Suppose that n=8 and the binary representation is 0 100 0001B.

Sign bit is 0 ⇒ positive

Absolute value is 100 0001B = 65D

Hence, the integer is +65D

Example 2: Suppose that n=8 and the binary representation is 1 000 0001B.

Sign bit is 1 ⇒ negative

Absolute value is 000 0001B = 1D

Hence, the integer is -1D

Example 3: Suppose that n=8 and the binary representation is 0 000 0000B.

Sign bit is 0 ⇒ positive

Absolute value is 000 0000B = 0D

Hence, the integer is +0D

Example 4: Suppose that n=8 and the binary representation is 1 000 0000B.

Sign bit is 1 ⇒ negative

Absolute value is 000 0000B = 0D

Hence, the integer is -0D

The drawbacks of sign-magnitude representation are:

- There are two representations (0000 0000B and 1000 0000B) for the number zero, which could lead to inefficiency and confusion.

- Positive and negative integers need to be processed separately.

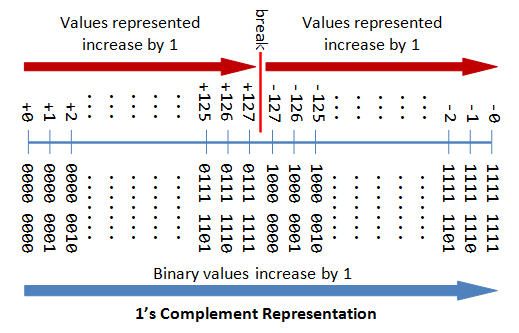

n-bit Sign Integers in 1's Complement Representation

In 1's complement representation:

- Again, the most significant bit (msb) is the sign bit, with value of 0 representing positive integers and 1 representing negative integers.

- The remaining n-1 bits represent the magnitude of the integer, as follows:

  - for positive integers, the absolute value of the integer is equal to "the magnitude of the (n-1)-bit binary pattern".

  - for negative integers, the absolute value of the integer is equal to "the magnitude of the complement (inverse) of the (n-1)-bit binary pattern" (hence called 1's complement).

Example 1: Suppose that n=8 and the binary representation is 0 100 0001B.

Sign bit is 0 ⇒ positive

Absolute value is 100 0001B = 65D

Hence, the integer is +65D

Example 2: Suppose that n=8 and the binary representation is 1 000 0001B.

Sign bit is 1 ⇒ negative

Absolute value is the complement of 000 0001B, i.e., 111 1110B = 126D

Hence, the integer is -126D

Example 3: Suppose that n=8 and the binary representation is 0 000 0000B.

Sign bit is 0 ⇒ positive

Absolute value is 000 0000B = 0D

Hence, the integer is +0D

Example 4: Suppose that n=8 and the binary representation is 1 111 1111B.

Sign bit is 1 ⇒ negative

Absolute value is the complement of 111 1111B, i.e., 000 0000B = 0D

Hence, the integer is -0D

Again, the drawbacks are:

- There are two representations (0000 0000B and 1111 1111B) for zero.

- The positive integers and negative integers need to be processed separately.

n-bit Sign Integers in 2's Complement Representation

In 2's complement representation:

- Again, the most significant bit (msb) is the sign bit, with value of 0 representing positive integers and 1 representing negative integers.

- The remaining n-1 bits represent the magnitude of the integer, as follows:

  - for positive integers, the absolute value of the integer is equal to "the magnitude of the (n-1)-bit binary pattern".

  - for negative integers, the absolute value of the integer is equal to "the magnitude of the complement of the (n-1)-bit binary pattern plus one" (hence called 2's complement).

Example 1: Suppose that n=8 and the binary representation is 0 100 0001B.

Sign bit is 0 ⇒ positive

Absolute value is 100 0001B = 65D

Hence, the integer is +65D

Example 2: Suppose that n=8 and the binary representation is 1 000 0001B.

Sign bit is 1 ⇒ negative

Absolute value is the complement of 000 0001B plus 1, i.e., 111 1110B + 1B = 127D

Hence, the integer is -127D

Example 3: Suppose that n=8 and the binary representation is 0 000 0000B.

Sign bit is 0 ⇒ positive

Absolute value is 000 0000B = 0D

Hence, the integer is +0D

Example 4: Suppose that n=8 and the binary representation is 1 111 1111B.

Sign bit is 1 ⇒ negative

Absolute value is the complement of 111 1111B plus 1, i.e., 000 0000B + 1B = 1D

Hence, the integer is -1D
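The three decoding rules can be compared side by side. The sketch below decodes an 8-bit pattern (passed as an `int` in the range 0 to 255) under each scheme; the class and method names are illustrative. Note that a Java `int` cannot distinguish -0 from +0, so both zero patterns decode to 0.

```java
class SignedSchemesDemo {
    // Sign-magnitude: low 7 bits are the magnitude, top bit is the sign.
    static int signMagnitude(int bits) {
        int magnitude = bits & 0x7F;
        return (bits & 0x80) == 0 ? magnitude : -magnitude;
    }

    // 1's complement: for negatives, invert the low 7 bits to get the magnitude.
    static int onesComplement(int bits) {
        if ((bits & 0x80) == 0) return bits & 0x7F;
        return -(~bits & 0x7F);
    }

    // 2's complement: for negatives, invert the low 7 bits and add one.
    static int twosComplement(int bits) {
        if ((bits & 0x80) == 0) return bits & 0x7F;
        return -((~bits & 0x7F) + 1);
    }

    public static void main(String[] args) {
        int pattern = 0b1000_0001;                   // 1 000 0001B
        System.out.println(signMagnitude(pattern));  // -1
        System.out.println(onesComplement(pattern)); // -126
        System.out.println(twosComplement(pattern)); // -127
    }
}
```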

Computers use 2's Complement Representation for Signed Integers

We have discussed three representations for signed integers: sign-magnitude, 1's complement and 2's complement. Computers use 2's complement in representing signed integers. This is because:

- There is only one representation for the number zero in 2's complement, instead of two representations in sign-magnitude and 1's complement.

- Positive and negative integers can be treated together in addition and subtraction. Subtraction can be carried out using the "addition logic".

Example 1: Addition of Two Positive Integers: Suppose that n=8, 65D + 5D = 70D

65D → 0100 0001B

5D → 0000 0101B(+

0100 0110B → 70D (OK)

Example 2: Subtraction is treated as Addition of a Positive and a Negative Integer: Suppose that n=8, 65D - 5D = 65D + (-5D) = 60D

65D → 0100 0001B

-5D → 1111 1011B(+

0011 1100B → 60D (discard carry - OK)

Example 3: Addition of Two Negative Integers: Suppose that n=8, -65D - 5D = (-65D) + (-5D) = -70D

-65D → 1011 1111B

-5D → 1111 1011B(+

1011 1010B → -70D (discard carry - OK)

Because of the fixed precision (i.e., fixed number of bits), an n-bit 2's complement signed integer has a certain range. For example, for n=8, the range of 2's complement signed integers is -128 to +127. During addition (and subtraction), it is important to check whether the result exceeds this range, in other words, whether overflow or underflow has occurred.

Example 4: Overflow: Suppose that n=8, 127D + 2D = 129D (overflow - beyond the range)

127D → 0111 1111B

2D → 0000 0010B(+

1000 0001B → -127D (wrong)

Example 5: Underflow: Suppose that n=8, -125D - 5D = -130D (underflow - below the range)

-125D → 1000 0011B

-5D → 1111 1011B(+

0111 1110B → +126D (wrong)
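The five examples above can be reproduced with Java's `byte`, which is an 8-bit 2's complement type. Casting an `int` sum to `byte` keeps only the low 8 bits, which corresponds to discarding the carry. The helper names (`add8`, `overflows8`) are illustrative.

```java
class OverflowDemo {
    // 8-bit addition: the cast keeps the low 8 bits, i.e., the carry is discarded.
    static byte add8(int a, int b) {
        return (byte) (a + b);
    }

    // True when the exact sum falls outside the 8-bit signed range -128 to +127.
    static boolean overflows8(int a, int b) {
        int sum = a + b;
        return sum < Byte.MIN_VALUE || sum > Byte.MAX_VALUE;
    }

    public static void main(String[] args) {
        System.out.println(add8(65, 5));     // 70
        System.out.println(add8(65, -5));    // 60
        System.out.println(add8(-65, -5));   // -70
        System.out.println(add8(127, 2));    // -127 (overflow, wrong)
        System.out.println(add8(-125, -5));  // 126  (underflow, wrong)
        System.out.println(overflows8(127, 2)); // true
    }
}
```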

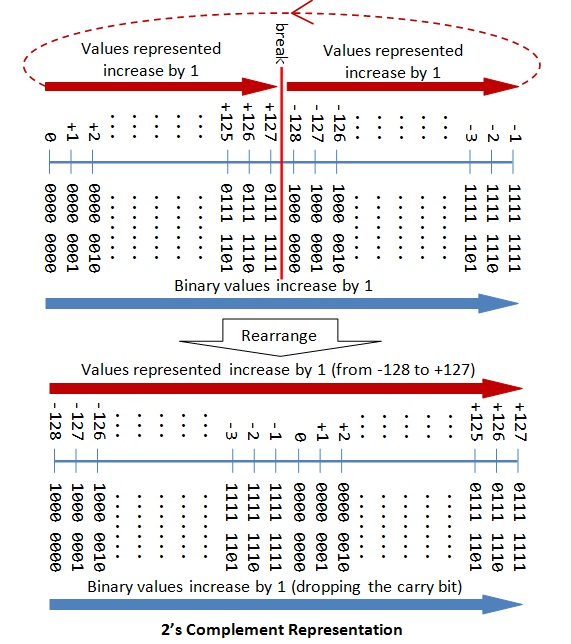

The following diagram explains how the 2's complement works. By re-arranging the number line, values from -128 to +127 are represented contiguously by ignoring the carry bit.

Range of n-bit 2's Complement Signed Integers

An n-bit 2's complement signed integer can represent integers from -2^(n-1) to +2^(n-1)-1, as tabulated. Take note that the scheme can represent all the integers within the range, without any gap. In other words, there are no missing integers within the supported range.

| n | minimum | maximum |
|---|---|---|
| 8 | -(2^7) (=-128) | +(2^7)-1 (=+127) |
| 16 | -(2^15) (=-32,768) | +(2^15)-1 (=+32,767) |
| 32 | -(2^31) (=-2,147,483,648) | +(2^31)-1 (=+2,147,483,647) (9+ digits) |
| 64 | -(2^63) (=-9,223,372,036,854,775,808) | +(2^63)-1 (=+9,223,372,036,854,775,807) (18+ digits) |
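The range formulas for both unsigned and 2's complement signed integers can be checked with `java.math.BigInteger`, which avoids overflow at n=64. This is only a sketch; `RangeDemo` and its method names are illustrative.

```java
import java.math.BigInteger;

class RangeDemo {
    // Largest n-bit unsigned value: 2^n - 1.
    static BigInteger unsignedMax(int n) {
        return BigInteger.ONE.shiftLeft(n).subtract(BigInteger.ONE);
    }

    // Smallest n-bit 2's complement value: -2^(n-1).
    static BigInteger signedMin(int n) {
        return BigInteger.ONE.shiftLeft(n - 1).negate();
    }

    // Largest n-bit 2's complement value: 2^(n-1) - 1.
    static BigInteger signedMax(int n) {
        return BigInteger.ONE.shiftLeft(n - 1).subtract(BigInteger.ONE);
    }

    public static void main(String[] args) {
        for (int n : new int[] {8, 16, 32, 64}) {
            System.out.println(n + "-bit unsigned: 0 to " + unsignedMax(n)
                    + ", signed: " + signedMin(n) + " to " + signedMax(n));
        }
    }
}
```

For the signed case, the results match the JDK constants `Byte.MIN_VALUE`/`Byte.MAX_VALUE` through `Long.MIN_VALUE`/`Long.MAX_VALUE`.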

Decoding 2's Complement Numbers

- Check the sign bit (denoted as S).

- If S=0, the number is positive and its absolute value is the binary value of the remaining n-1 bits.

- If S=1, the number is negative. You could "invert the n-1 bits and plus 1" to get the absolute value of the negative number.

Alternatively, you could scan the remaining n-1 bits from the right (least-significant bit). Look for the first occurrence of 1. Flip all the bits to the left of that first occurrence of 1. The flipped pattern gives the absolute value. For example,

n = 8, bit pattern = 1 100 0100B

S = 1 → negative

Scanning from the right and flip all the bits to the left of the first occurrence of 1 ⇒ 011 1100B = 60D

Hence, the value is -60D
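The scan-and-flip shortcut can be sketched on a bit string. The sketch assumes the input holds only '0' and '1', with 2 to 31 characters; `TwosDecodeDemo` and `decode` are illustrative names. One case needs separate handling and is an addition not spelled out above: when the remaining n-1 bits contain no 1 at all (e.g., 1000 0000B), the value is the minimum, -2^(n-1).

```java
class TwosDecodeDemo {
    static int decode(String bits) {
        String rest = bits.substring(1);             // the n-1 bits after the sign bit
        if (bits.charAt(0) == '0') {
            return Integer.parseInt(rest, 2);        // positive: plain magnitude
        }
        int lastOne = rest.lastIndexOf('1');         // first 1 when scanning from the right
        if (lastOne < 0) {
            return -(1 << rest.length());            // 100...0 is -2^(n-1)
        }
        StringBuilder flipped = new StringBuilder(rest);
        for (int i = 0; i < lastOne; i++) {          // flip everything left of that 1
            flipped.setCharAt(i, rest.charAt(i) == '0' ? '1' : '0');
        }
        return -Integer.parseInt(flipped.toString(), 2);
    }

    public static void main(String[] args) {
        System.out.println(decode("11000100")); // -60
        System.out.println(decode("01000001")); // 65
    }
}
```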

Big Endian vs. Little Endian

Modern computers store one byte of data in each memory address or location, i.e., byte addressable memory. A 32-bit integer is, therefore, stored in 4 memory addresses.

The term "Endian" refers to the order of storing bytes in computer memory. In the "Big Endian" scheme, the most significant byte is stored first in the lowest memory address (or big in first), while "Little Endian" stores the least significant byte in the lowest memory address.

For example, the 32-bit integer 12345678H (305419896D) is stored as 12H 34H 56H 78H in big endian; and 78H 56H 34H 12H in little endian. A 16-bit integer 00H 01H is interpreted as 0001H in big endian, and 0100H in little endian.
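The byte orders in this example can be observed with `java.nio.ByteBuffer`, whose `order` method selects big or little endian. The wrapper names (`EndianDemo`, `toBytes`, `readShort`, `hex`) are illustrative.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

class EndianDemo {
    // Lay out a 32-bit integer as 4 bytes in the given byte order.
    static byte[] toBytes(int value, ByteOrder order) {
        return ByteBuffer.allocate(4).order(order).putInt(value).array();
    }

    // Interpret two bytes as a 16-bit integer in the given byte order.
    static short readShort(byte first, byte second, ByteOrder order) {
        return ByteBuffer.wrap(new byte[] {first, second}).order(order).getShort();
    }

    // Format bytes as space-separated two-digit hex, lowest address first.
    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            if (sb.length() > 0) sb.append(' ');
            sb.append(String.format("%02X", b));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(hex(toBytes(0x12345678, ByteOrder.BIG_ENDIAN)));    // 12 34 56 78
        System.out.println(hex(toBytes(0x12345678, ByteOrder.LITTLE_ENDIAN))); // 78 56 34 12
        System.out.println(readShort((byte) 0x00, (byte) 0x01, ByteOrder.LITTLE_ENDIAN)); // 256
    }
}
```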

Exercise (Integer Representation)

- What are the ranges of 8-bit, 16-bit, 32-bit and 64-bit integers, in "unsigned" and "signed" representation?

- Give the value of 88, 0, 1, 127, and 255 in 8-bit unsigned representation.

- Give the value of +88, -88, -1, 0, +1, -128, and +127 in 8-bit 2's complement signed representation.

- Give the value of +88, -88, -1, 0, +1, -127, and +127 in 8-bit sign-magnitude representation.

- Give the value of +88, -88, -1, 0, +1, -127 and +127 in 8-bit 1's complement representation.

- [TODO] more.

Answers

- The range of unsigned n-bit integers is [0, 2^n - 1]. The range of n-bit 2's complement signed integers is [-2^(n-1), +2^(n-1)-1].

- 88 (0101 1000), 0 (0000 0000), 1 (0000 0001), 127 (0111 1111), 255 (1111 1111).

- +88 (0101 1000), -88 (1010 1000), -1 (1111 1111), 0 (0000 0000), +1 (0000 0001), -128 (1000 0000), +127 (0111 1111).

- +88 (0101 1000), -88 (1101 1000), -1 (1000 0001), 0 (0000 0000 or 1000 0000), +1 (0000 0001), -127 (1111 1111), +127 (0111 1111).

- +88 (0101 1000), -88 (1010 0111), -1 (1111 1110), 0 (0000 0000 or 1111 1111), +1 (0000 0001), -127 (1000 0000), +127 (0111 1111).

Floating-Indicate Number Representation

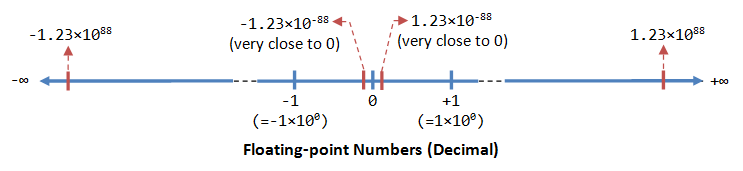

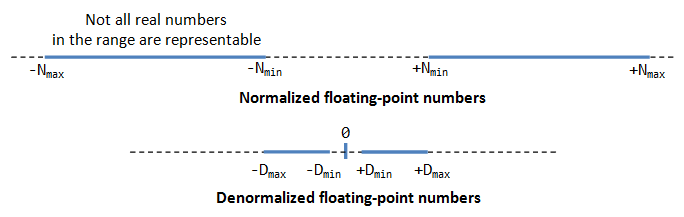

A floating-betoken number (or real number) can stand for a very large (ane.23×10^88) or a very minor (1.23×10^-88) value. It could also correspond very large negative number (-1.23×ten^88) and very small negative number (-1.23×10^88), as well equally zero, as illustrated:

A floating-point number is typically expressed in the scientific note, with a fraction (F), and an exponent (E) of a sure radix (r), in the grade of F×r^E. Decimal numbers apply radix of 10 (F×10^Eastward); while binary numbers use radix of two (F×2^E).

Representation of floating signal number is not unique. For example, the number 55.66 can be represented as 5.566×10^1, 0.5566×10^2, 0.05566×10^3, and and so on. The fractional part can exist normalized. In the normalized class, in that location is only a single not-zero digit before the radix indicate. For case, decimal number 123.4567 tin be normalized every bit 1.234567×10^2; binary number 1010.1011B can exist normalized as 1.0101011B×2^3.

Information technology is important to note that floating-indicate numbers suffer from loss of precision when represented with a fixed number of bits (e.g., 32-bit or 64-bit). This is because in that location are space number of real numbers (even within a small range of says 0.0 to 0.ane). On the other hand, a n-chip binary pattern can represent a finite 2^n singled-out numbers. Hence, non all the real numbers can be represented. The nearest approximation will exist used instead, resulted in loss of accuracy.

Information technology is also of import to note that floating number arithmetic is very much less efficient than integer arithmetic. It could be speed upward with a so-called defended floating-point co-processor. Hence, use integers if your application does not require floating-bespeak numbers.

In computers, floating-point numbers are represented in scientific notation of fraction (F) and exponent (Eastward) with a radix of 2, in the form of F×2^E. Both East and F tin can exist positive as well every bit negative. Mod computers adopt IEEE 754 standard for representing floating-point numbers. There are two representation schemes: 32-chip single-precision and 64-bit double-precision.

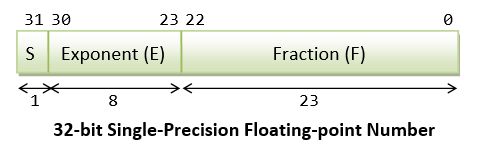

IEEE-754 32-bit Single-Precision Floating-Point Numbers

In 32-bit single-precision floating-point representation:

- The most significant bit is the sign bit (S), with 0 for positive numbers and 1 for negative numbers.

- The following 8 bits represent the exponent (E).

- The remaining 23 bits represent the fraction (F).

Normalized Form

Let's illustrate with an example. Suppose that the 32-bit pattern is 1 1000 0001 011 0000 0000 0000 0000 0000, with:

- S = 1

- E = 1000 0001

- F = 011 0000 0000 0000 0000 0000

In the normalized form, the actual fraction is normalized with an implicit leading 1 in the form of 1.F. In this example, the actual fraction is 1.011 0000 0000 0000 0000 0000 = 1 + 1×2^-2 + 1×2^-3 = 1.375D.

The sign bit represents the sign of the number, with S=0 for positive and S=1 for negative number. In this example with S=1, this is a negative number, i.e., -1.375D.

In normalized form, the actual exponent is E-127 (so-called excess-127 or bias-127). This is because we need to represent both positive and negative exponents. With an 8-bit E, ranging from 0 to 255, the excess-127 scheme could provide actual exponents of -127 to 128. In this example, E-127=129-127=2D.

Hence, the number represented is -1.375×2^2=-5.5D.

De-Normalized Form

Normalized form has a serious problem: with an implicit leading 1 for the fraction, it cannot represent the number zero! Convince yourself of this!

De-normalized form was devised to represent zero and other numbers.

For E=0, the numbers are in the de-normalized form. An implicit leading 0 (instead of 1) is used for the fraction; and the actual exponent is always -126. Hence, the number zero can be represented with E=0 and F=0 (because 0.0×2^-126=0).

We can also represent very small positive and negative numbers in de-normalized form with E=0. For example, if S=1, E=0, and F=011 0000 0000 0000 0000 0000. The actual fraction is 0.011=1×2^-2+1×2^-3=0.375D. Since S=1, it is a negative number. With E=0, the actual exponent is -126. Hence the number is -0.375×2^-126 = -4.4×10^-39, which is an extremely small negative number (close to zero).

Summary

In summary, the value (N) is calculated as follows:

- For 1 ≤ E ≤ 254, N = (-1)^S × 1.F × 2^(E-127). These numbers are in the so-called normalized form. The sign-bit represents the sign of the number. The fractional part (1.F) is normalized with an implicit leading 1. The exponent is biased (or in excess) by 127, so as to represent both positive and negative exponents. The range of the exponent is -126 to +127.

- For E = 0, N = (-1)^S × 0.F × 2^(-126). These numbers are in the so-called denormalized form. The exponent of 2^-126 evaluates to a very small number. Denormalized form is needed to represent zero (with F=0 and E=0). It can also represent very small positive and negative numbers close to zero.

- For E = 255, it represents special values, such as ±INF (positive and negative infinity) and NaN (not a number). This is beyond the scope of this article.

Example 1: Suppose that the IEEE-754 32-bit floating-point representation pattern is 0 10000000 110 0000 0000 0000 0000 0000.

Sign bit S = 0 ⇒ positive number

E = 1000 0000B = 128D (in normalized form)

Fraction is 1.11B (with an implicit leading 1) = 1 + 1×2^-1 + 1×2^-2 = 1.75D

The number is +1.75 × 2^(128-127) = +3.5D

Example 2: Suppose that the IEEE-754 32-bit floating-point representation pattern is 1 01111110 100 0000 0000 0000 0000 0000.

Sign bit S = 1 ⇒ negative number

E = 0111 1110B = 126D (in normalized form)

Fraction is 1.1B (with an implicit leading 1) = 1 + 2^-1 = 1.5D

The number is -1.5 × 2^(126-127) = -0.75D

Example 3: Suppose that the IEEE-754 32-bit floating-point representation pattern is 1 01111110 000 0000 0000 0000 0000 0001.

Sign bit S = 1 ⇒ negative number

E = 0111 1110B = 126D (in normalized form)

Fraction is 1.000 0000 0000 0000 0000 0001B (with an implicit leading 1) = 1 + 2^-23

The number is -(1 + 2^-23) × 2^(126-127) = -0.500000059604644775390625 (may not be exact in decimal!)

Example 4 (De-Normalized Form): Suppose that the IEEE-754 32-bit floating-point representation pattern is 1 00000000 000 0000 0000 0000 0000 0001.

Sign bit S = 1 ⇒ negative number

E = 0 (in de-normalized form)

Fraction is 0.000 0000 0000 0000 0000 0001B (with an implicit leading 0) = 1×2^-23

The number is -2^-23 × 2^(-126) = -2^(-149) ≈ -1.4×10^-45
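The three cases in the summary can be applied by hand to a 32-bit pattern and compared with the JDK's own decoding. The sketch below is one possible way to do this: `Float32Demo` and `decode` are illustrative names, while `Float.intBitsToFloat` and `Math.scalb` (which multiplies by a power of 2) are standard JDK methods.

```java
class Float32Demo {
    // Decode an IEEE-754 single-precision bit pattern by hand.
    static double decode(int bits) {
        int s = bits >>> 31;                 // 1 sign bit
        int e = (bits >>> 23) & 0xFF;        // 8 exponent bits
        int f = bits & 0x7FFFFF;             // 23 fraction bits
        double sign = (s == 0) ? 1.0 : -1.0;
        double fraction = f / (double) (1 << 23);     // the value 0.F
        if (e == 255) {                      // special values
            return f == 0 ? sign * Double.POSITIVE_INFINITY : Double.NaN;
        }
        if (e == 0) {                        // de-normalized: 0.F × 2^-126
            return sign * Math.scalb(fraction, -126);
        }
        return sign * Math.scalb(1.0 + fraction, e - 127); // normalized: 1.F × 2^(E-127)
    }

    public static void main(String[] args) {
        int[] patterns = {0xC0B00000, 0x40600000, 0xBF400000, 0x80000001};
        for (int p : patterns) {
            // Hand-decoded value next to the JDK's interpretation of the same bits.
            System.out.println(decode(p) + " vs " + Float.intBitsToFloat(p));
        }
    }
}
```

The four patterns are the worked examples from this section: -5.5, +3.5, -0.75, and the de-normalized -2^-149.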

Exercises (Floating-point Numbers)

- Compute the largest and smallest positive numbers that can be represented in the 32-bit normalized form.

- Compute the largest and smallest negative numbers that can be represented in the 32-bit normalized form.

- Repeat (1) for the 32-bit denormalized form.

- Repeat (2) for the 32-bit denormalized form.

Hints:

- Largest positive number: S=0, E=1111 1110 (254), F=111 1111 1111 1111 1111 1111. Smallest positive number: S=0, E=0000 0001 (1), F=000 0000 0000 0000 0000 0000.

- Same as above, but S=1.

- Largest positive number: S=0, E=0, F=111 1111 1111 1111 1111 1111. Smallest positive number: S=0, E=0, F=000 0000 0000 0000 0000 0001.

- Same as above, but S=1.

Notes For Java Users

You can use the JDK methods Float.intBitsToFloat(int bits) or Double.longBitsToDouble(long bits) to create a single-precision 32-bit float or double-precision 64-bit double with the specific bit patterns, and print their values. For examples,

System.out.println(Float.intBitsToFloat(0x7fffff));

System.out.println(Double.longBitsToDouble(0x1fffffffffffffL));

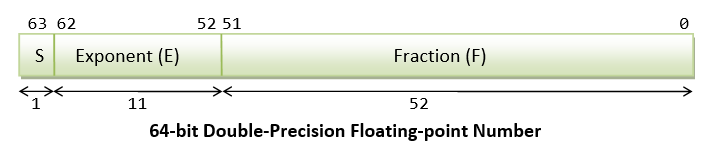

IEEE-754 64-bit Double-Precision Floating-Point Numbers

The representation scheme for 64-bit double-precision is similar to the 32-bit single-precision:

- The most significant bit is the sign bit (S), with 0 for positive numbers and 1 for negative numbers.

- The following 11 bits represent the exponent (E).

- The remaining 52 bits represent the fraction (F).

The value (N) is calculated as follows:

- Normalized form: For 1 ≤ E ≤ 2046, N = (-1)^S × 1.F × 2^(E-1023).

- Denormalized form: For E = 0, N = (-1)^S × 0.F × 2^(-1022). These are in the denormalized form.

- For E = 2047, N represents special values, such as ±INF (infinity), NaN (not a number).
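To see the three double-precision fields of an actual value, the standard `Double.doubleToLongBits` method exposes the 64-bit pattern, which can then be split with shifts and masks. The helper names below are illustrative.

```java
class Float64Demo {
    static int sign(double x) {
        return (int) (Double.doubleToLongBits(x) >>> 63);          // 1 sign bit
    }

    static int biasedExponent(double x) {
        return (int) ((Double.doubleToLongBits(x) >>> 52) & 0x7FF); // 11 exponent bits
    }

    static long fraction(double x) {
        return Double.doubleToLongBits(x) & 0xFFFFFFFFFFFFFL;       // 52 fraction bits
    }

    public static void main(String[] args) {
        double x = -5.5; // -1.375 × 2^2, so S=1, E=2+1023=1025, F=.011B
        System.out.println(sign(x));                        // 1
        System.out.println(biasedExponent(x));              // 1025
        System.out.println(Long.toHexString(fraction(x)));  // 6000000000000
    }
}
```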

More on Floating-Point Representation

There are three parts in the floating-point representation:

- The sign bit (S) is self-explanatory (0 for positive numbers and 1 for negative numbers).

- For the exponent (E), a so-called bias (or excess) is applied so as to represent both positive and negative exponents. The bias is set at half of the range. For single precision with an 8-bit exponent, the bias is 127 (or excess-127). For double precision with an 11-bit exponent, the bias is 1023 (or excess-1023).

- The fraction (F) (also called the mantissa or significand) is composed of an implicit leading bit (before the radix point) and the fractional bits (after the radix point). The leading bit for normalized numbers is 1, while the leading bit for denormalized numbers is 0.

Normalized Floating-Point Numbers

In normalized form, the radix point is placed after the first non-zero digit, e.g., 9.8765D×10^-23D, 1.001011B×2^11B. For a binary number, the leading bit is always 1 and need not be represented explicitly, which saves one bit of storage.

In IEEE 754's normalized form:

- For single-precision, 1 ≤ E ≤ 254 with an excess of 127. Hence, the actual exponent is from -126 to +127. Negative exponents are used to represent small numbers (< 1.0), while positive exponents are used to represent large numbers (> 1.0). N = (-1)^S × 1.F × 2^(E-127)

- For double-precision, 1 ≤ E ≤ 2046 with an excess of 1023. The actual exponent is from -1022 to +1023, and N = (-1)^S × 1.F × 2^(E-1023)

Take note that an n-bit pattern has a finite number of combinations (= 2^n), which can represent only finitely many distinct numbers. It is not possible to represent the infinitely many numbers on the real axis (even a small range, say 0.0 to 1.0, contains infinitely many numbers). That is, not all real numbers can be represented exactly as floating-point numbers. Instead, the closest approximation is used, which leads to loss of accuracy.
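The loss of accuracy is easy to observe. The sketch below uses java.math.BigDecimal to print the exact value that is actually stored for a decimal literal; the class and method names (ClosestApproximation, storedValue, isExact) are made up for this illustration.

```java
import java.math.BigDecimal;

class ClosestApproximation {
    // Exact decimal expansion of the double nearest to the given decimal literal.
    static BigDecimal storedValue(String decimalLiteral) {
        return new BigDecimal(Double.parseDouble(decimalLiteral));
    }

    // True only if the literal is stored without any rounding.
    static boolean isExact(String decimalLiteral) {
        return storedValue(decimalLiteral).compareTo(new BigDecimal(decimalLiteral)) == 0;
    }

    public static void main(String[] args) {
        System.out.println(storedValue("0.1"));   // a long expansion slightly above 0.1
        System.out.println(isExact("0.1"));       // false: 0.1 has no finite binary expansion
        System.out.println(isExact("0.5"));       // true: 0.5 = 1.0B x 2^-1
        System.out.println(0.1 + 0.2 == 0.3);     // false: the rounding errors do not cancel
    }
}
```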

The minimum and maximum normalized floating-point numbers are:

| Precision | Normalized N(min) | Normalized N(max) |
|---|---|---|
| Single | 0080 0000H; 0 00000001 00000000000000000000000B; E = 1, F = 0; N(min) = 1.0B × 2^-126 (≈1.17549435 × 10^-38) | 7F7F FFFFH; 0 11111110 11111111111111111111111B; E = 254, F = 11111111111111111111111B; N(max) = 1.1...1B × 2^127 = (2 - 2^-23) × 2^127 (≈3.4028235 × 10^38) |
| Double | 0010 0000 0000 0000H; N(min) = 1.0B × 2^-1022 (≈2.2250738585072014 × 10^-308) | 7FEF FFFF FFFF FFFFH; N(max) = 1.1...1B × 2^1023 = (2 - 2^-52) × 2^1023 (≈1.7976931348623157 × 10^308) |

Denormalized Floating-Point Numbers

If E = 0 but the fraction is non-zero, then the value is in denormalized form, and a leading bit of 0 is assumed, as follows:

- For single-precision, E = 0, N = (-1)^S × 0.F × 2^(-126)

- For double-precision, E = 0, N = (-1)^S × 0.F × 2^(-1022)

Denormalized form can represent very small numbers close to zero, as well as zero itself, which cannot be represented in normalized form, as shown in the above figure.

The minimum and maximum denormalized floating-point numbers are:

| Precision | Denormalized D(min) | Denormalized D(max) |
|---|---|---|
| Single | 0000 0001H; 0 00000000 00000000000000000000001B; E = 0, F = 00000000000000000000001B; D(min) = 0.0...1 × 2^-126 = 1 × 2^-23 × 2^-126 = 2^-149 (≈1.4 × 10^-45) | 007F FFFFH; 0 00000000 11111111111111111111111B; E = 0, F = 11111111111111111111111B; D(max) = 0.1...1 × 2^-126 = (1-2^-23)×2^-126 (≈1.1754942 × 10^-38) |
| Double | 0000 0000 0000 0001H; D(min) = 0.0...1 × 2^-1022 = 1 × 2^-52 × 2^-1022 = 2^-1074 (≈4.9 × 10^-324) | 000F FFFF FFFF FFFFH; D(max) = 0.1...1 × 2^-1022 = (1-2^-52)×2^-1022 (≈2.2250738585072009 × 10^-308) |
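The double-precision limits can be probed directly. In this sketch the largest denormalized double is taken to be the pattern with E = 0 and F all ones (000F FFFF FFFF FFFFH); the class and constant names are hypothetical.

```java
class DenormalizedLimits {
    // E = 0, F = all ones
    static final double LARGEST_DENORMAL = Double.longBitsToDouble(0x000FFFFFFFFFFFFFL);
    // E = 0, F = 0...01
    static final double SMALLEST_DENORMAL = Double.longBitsToDouble(0x0000000000000001L);

    public static void main(String[] args) {
        // The smallest denormalized value is what Java calls Double.MIN_VALUE
        System.out.println(SMALLEST_DENORMAL == Double.MIN_VALUE);
        // The very next double above the largest denormal is the smallest normalized one
        System.out.println(Math.nextUp(LARGEST_DENORMAL) == Double.MIN_NORMAL);
        // Halving the smallest denormal underflows to zero
        System.out.println(Double.MIN_VALUE / 2 == 0.0);
    }
}
```

All three lines should print true, which shows how the denormalized numbers fill the gap between zero and the smallest normalized number.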

Special Values

Zero: Zero cannot be represented in the normalized form, and must be represented in denormalized form with E=0 and F=0. There are two representations for zero: +0 with S=0 and -0 with S=1.

Infinity: The values of +infinity (e.g., 1/0) and -infinity (e.g., -1/0) are represented with an exponent of all 1's (E = 255 for single-precision and E = 2047 for double-precision), F=0, and S=0 (for +INF) or S=1 (for -INF).

Not a Number (NaN): NaN denotes a value that cannot be represented as a real number (e.g., 0/0). NaN is represented with an exponent of all 1's (E = 255 for single-precision and E = 2047 for double-precision) and any non-zero fraction.
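A short sketch that prints the single-precision bit patterns of these special values. The class name SpecialValues and the helper bits are made up for illustration. Note that Float.floatToIntBits reports every NaN as the single canonical pattern 7FC00000H, although any non-zero fraction would qualify.

```java
class SpecialValues {
    // The 32-bit pattern of a float as 8 hex digits.
    static String bits(float f) {
        return String.format("%08X", Float.floatToIntBits(f));
    }

    public static void main(String[] args) {
        float zero = 0.0f;
        System.out.println(bits(zero));          // 00000000 (+0)
        System.out.println(bits(-zero));         // 80000000 (-0)
        System.out.println(bits(1.0f / zero));   // 7F800000 (+INF: E=255, F=0)
        System.out.println(bits(-1.0f / zero));  // FF800000 (-INF)
        System.out.println(bits(zero / zero));   // 7FC00000 (NaN: E=255, F non-zero)

        float nan = zero / zero;
        System.out.println(zero == -zero);       // true: +0 and -0 compare equal
        System.out.println(nan == nan);          // false: NaN is not equal to anything
        System.out.println(Float.isNaN(nan));    // true: the reliable way to test for NaN
    }
}
```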

Character Encoding

In computer memory, characters are "encoded" (or "represented") using a chosen "character encoding scheme" (aka "character set", "charset", "character map", or "code page").

For example, in ASCII (as well as Latin-1, Unicode, and many other character sets):

- code numbers 65D (41H) to 90D (5AH) represent 'A' to 'Z', respectively.

- code numbers 97D (61H) to 122D (7AH) represent 'a' to 'z', respectively.

- code numbers 48D (30H) to 57D (39H) represent '0' to '9', respectively.

It is important to note that the representation scheme must be known before a binary pattern can be interpreted. E.g., the 8-bit pattern "0100 0010B" could represent anything under the sun, known only to the person who encoded it.

The most commonly-used character encoding schemes are: 7-bit ASCII (ISO/IEC 646) and 8-bit Latin-x (ISO/IEC 8859-x) for Western European characters, and Unicode (ISO/IEC 10646) for internationalization (i18n).

A 7-bit encoding scheme (such as ASCII) can represent 128 characters and symbols. An 8-bit character encoding scheme (such as Latin-x) can represent 256 characters and symbols, whereas a 16-bit encoding scheme (such as Unicode UCS-2) can represent 65,536 characters and symbols.

7-bit ASCII Code (aka US-ASCII, ISO/IEC 646, ITU-T T.50)

- ASCII (American Standard Code for Information Interchange) is one of the earlier character coding schemes.

- ASCII is originally a 7-bit code. It has been extended to 8-bit to better utilize the 8-bit computer memory organization. (The 8th bit was originally used for parity check in the early computers.)

- Code numbers 32D (20H) to 126D (7EH) are printable (displayable) characters, tabulated (arranged in hexadecimal and decimal) as follows:

| Hex | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | A | B | C | D | E | F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2 | SP | ! | " | # | $ | % | & | ' | ( | ) | * | + | , | - | . | / |
| 3 | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | : | ; | < | = | > | ? |
| 4 | @ | A | B | C | D | E | F | G | H | I | J | K | L | M | N | O |
| 5 | P | Q | R | S | T | U | V | W | X | Y | Z | [ | \ | ] | ^ | _ |
| 6 | ` | a | b | c | d | e | f | g | h | i | j | k | l | m | n | o |
| 7 | p | q | r | s | t | u | v | w | x | y | z | { | \| | } | ~ | |

| Dec | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 3 | | | SP | ! | " | # | $ | % | & | ' |
| 4 | ( | ) | * | + | , | - | . | / | 0 | 1 |
| 5 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | : | ; |
| 6 | < | = | > | ? | @ | A | B | C | D | E |
| 7 | F | G | H | I | J | K | L | M | N | O |
| 8 | P | Q | R | S | T | U | V | W | X | Y |
| 9 | Z | [ | \ | ] | ^ | _ | ` | a | b | c |
| 10 | d | e | f | g | h | i | j | k | l | m |
| 11 | n | o | p | q | r | s | t | u | v | w |
| 12 | x | y | z | { | \| | } | ~ | | | |

  - Code number 32D (20H) is the blank or space character.

  - '0' to '9': 30H-39H (0011 0000B to 0011 1001B) or (0011 xxxxB where xxxx is the equivalent integer value).

  - 'A' to 'Z': 41H-5AH (0100 0001B to 0101 1010B) or (010x xxxxB). 'A' to 'Z' are continuous without gap.

  - 'a' to 'z': 61H-7AH (0110 0001B to 0111 1010B) or (011x xxxxB). 'a' to 'z' are also continuous without gap. However, there is a gap between uppercase and lowercase letters. To convert between upper and lowercase, flip the value of bit-5.

- Code numbers 0D (00H) to 31D (1FH), and 127D (7FH) are special control characters, which are non-printable (non-displayable), as tabulated below. Many of these characters were used in the early days for transmission control (e.g., STX, ETX) and printer control (e.g., Form-Feed), and are now obsolete. The remaining meaningful codes today are:

  - 09H for Tab ('\t').

  - 0AH for Line-Feed or newline (LF or '\n') and 0DH for Carriage-Return (CR or '\r'), which are used as line delimiter (aka line separator, end-of-line) for text files. There is unfortunately no standard for line delimiter: Unixes and Mac use 0AH (LF or "\n"), Windows uses 0D0AH (CR+LF or "\r\n"). Programming languages such as C/C++/Java (which were created on Unix) use 0AH (LF or "\n").

  - In programming languages such as C/C++/Java, line-feed (0AH) is denoted as '\n', carriage-return (0DH) as '\r', tab (09H) as '\t'.

| Dec | Hex | Code | Meaning | Dec | Hex | Code | Meaning |
|---|---|---|---|---|---|---|---|
| 0 | 00 | NUL | Null | 17 | 11 | DC1 | Device Control 1 |
| 1 | 01 | SOH | Start of Heading | 18 | 12 | DC2 | Device Control 2 |
| 2 | 02 | STX | Start of Text | 19 | 13 | DC3 | Device Control 3 |
| 3 | 03 | ETX | End of Text | 20 | 14 | DC4 | Device Control 4 |
| 4 | 04 | EOT | End of Transmission | 21 | 15 | NAK | Negative Ack. |
| 5 | 05 | ENQ | Enquiry | 22 | 16 | SYN | Sync. Idle |
| 6 | 06 | ACK | Acknowledgment | 23 | 17 | ETB | End of Transmission Block |
| 7 | 07 | BEL | Bell | 24 | 18 | CAN | Cancel |
| 8 | 08 | BS | Back Space '\b' | 25 | 19 | EM | End of Medium |
| 9 | 09 | HT | Horizontal Tab '\t' | 26 | 1A | SUB | Substitute |
| 10 | 0A | LF | Line Feed '\n' | 27 | 1B | ESC | Escape |
| 11 | 0B | VT | Vertical Tab | 28 | 1C | IS4 | File Separator |
| 12 | 0C | FF | Form Feed '\f' | 29 | 1D | IS3 | Group Separator |
| 13 | 0D | CR | Carriage Return '\r' | 30 | 1E | IS2 | Record Separator |
| 14 | 0E | SO | Shift Out | 31 | 1F | IS1 | Unit Separator |
| 15 | 0F | SI | Shift In | | | | |
| 16 | 10 | DLE | Datalink Escape | 127 | 7F | DEL | Delete |
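The regular bit patterns of the ASCII digits and letters described above lend themselves to simple bit manipulation. The following is a minimal sketch; the class and method names (AsciiTricks, toggleCase, digitValue) are made up for this illustration, and real programs would normally use Character.toUpperCase, Character.toLowerCase and Character.digit instead.

```java
class AsciiTricks {
    // Flip bit-5 (value 20H) to switch an ASCII letter between upper and lower case.
    // Non-letters are returned unchanged.
    static char toggleCase(char c) {
        boolean isLetter = (c >= 'A' && c <= 'Z') || (c >= 'a' && c <= 'z');
        return isLetter ? (char) (c ^ 0x20) : c;
    }

    // '0'..'9' are 0011 xxxxB, so the low 4 bits are the digit's integer value.
    static int digitValue(char c) {
        if (c < '0' || c > '9') {
            throw new IllegalArgumentException("not an ASCII digit: " + c);
        }
        return c & 0x0F;
    }

    public static void main(String[] args) {
        System.out.println(toggleCase('a'));   // A
        System.out.println(toggleCase('Z'));   // z
        System.out.println(digitValue('7'));   // 7
    }
}
```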

8-bit Latin-1 (aka ISO/IEC 8859-1)

ISO/IEC-8859 is a collection of 8-bit character encoding standards for the western languages.

ISO/IEC 8859-1, aka Latin alphabet No. 1, or Latin-1 in short, is the most commonly-used encoding scheme for Western European languages. It has 191 printable characters from the Latin script, which covers languages like English, German, Italian, Portuguese and Spanish. Latin-1 is backward compatible with the 7-bit US-ASCII code. That is, the first 128 characters in Latin-1 (code numbers 0 to 127 (7FH)) are the same as US-ASCII. Code numbers 128 (80H) to 159 (9FH) are not assigned. Code numbers 160 (A0H) to 255 (FFH) are given as follows:

| Hex | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | A | B | C | D | E | F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| A | NBSP | ¡ | ¢ | £ | ¤ | ¥ | ¦ | § | ¨ | © | ª | « | ¬ | SHY | ® | ¯ |
| B | ° | ± | ² | ³ | ´ | µ | ¶ | · | ¸ | ¹ | º | » | ¼ | ½ | ¾ | ¿ |
| C | À | Á | Â | Ã | Ä | Å | Æ | Ç | È | É | Ê | Ë | Ì | Í | Î | Ï |
| D | Ð | Ñ | Ò | Ó | Ô | Õ | Ö | × | Ø | Ù | Ú | Û | Ü | Ý | Þ | ß |
| E | à | á | â | ã | ä | å | æ | ç | è | é | ê | ë | ì | í | î | ï |
| F | ð | ñ | ò | ó | ô | õ | ö | ÷ | ø | ù | ú | û | ü | ý | þ | ÿ |

ISO/IEC-8859 has 16 parts. Besides the most commonly-used Part 1, Part 2 is meant for Central European (Polish, Czech, Hungarian, etc.), Part 3 for South European (Turkish, etc.), Part 4 for North European (Estonian, Latvian, etc.), Part 5 for Cyrillic, Part 6 for Arabic, Part 7 for Greek, Part 8 for Hebrew, Part 9 for Turkish, Part 10 for Nordic, Part 11 for Thai, Part 12 was abandoned, Part 13 for Baltic Rim, Part 14 for Celtic, Part 15 for French, Finnish, etc., and Part 16 for South-Eastern European.

Other 8-bit Extensions of US-ASCII (ASCII Extensions)

Besides the standardized ISO-8859-x, there are many 8-bit ASCII extensions, which are not compatible with each other.

ANSI (American National Standards Institute) (aka Windows-1252, or Windows Codepage 1252): for Latin alphabets used in the legacy DOS/Windows systems. It is a superset of ISO-8859-1 with code numbers 128 (80H) to 159 (9FH) assigned to displayable characters, such as "smart" single-quotes and double-quotes. A common problem in web browsers is that all the quotes and apostrophes (produced by "smart quotes" in some Microsoft software) are replaced with question marks or some strange symbols. This happens because the document is labeled as ISO-8859-1 (instead of Windows-1252), where these code numbers are undefined. Most modern browsers and e-mail clients treat charset ISO-8859-1 as Windows-1252 in order to accommodate such mislabeling.

| Hex | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | A | B | C | D | E | F |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 8 | € | | ‚ | ƒ | „ | … | † | ‡ | ˆ | ‰ | Š | ‹ | Œ | | Ž | |
| 9 | | ‘ | ’ | “ | ” | • | – | — | ˜ | ™ | š | › | œ | | ž | Ÿ |
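The mislabeling problem can be reproduced by decoding the same byte under both charsets. This is a sketch only: it assumes the Java runtime ships the "windows-1252" charset (common JDK builds do, but only a handful of charsets such as ISO-8859-1 and UTF-8 are guaranteed), and the class and method names are hypothetical.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

class SmartQuoteDecoding {
    // Decode one byte under the given charset and return the resulting Unicode code number.
    static int decodeSingleByte(int b, Charset charset) {
        return new String(new byte[] {(byte) b}, charset).codePointAt(0);
    }

    public static void main(String[] args) {
        Charset cp1252 = Charset.forName("windows-1252");   // assumed to be available
        // 93H is the left "smart" double-quote in Windows-1252 ...
        System.out.printf("U+%04X%n", decodeSingleByte(0x93, cp1252));
        // ... but an invisible control code in ISO-8859-1
        System.out.printf("U+%04X%n", decodeSingleByte(0x93, StandardCharsets.ISO_8859_1));
    }
}
```

The first line should show U+201C (left double quotation mark) and the second U+0093, which has no printable glyph.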

EBCDIC (Extended Binary Coded Decimal Interchange Code): Used in the early IBM computers.

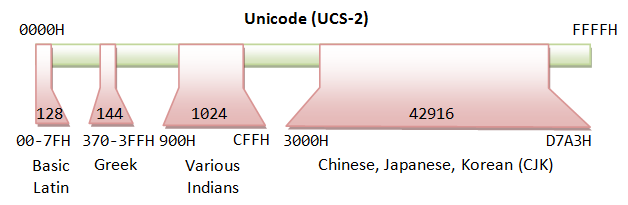

Unicode (aka ISO/IEC 10646 Universal Character Set)

Before Unicode, no single character encoding scheme could represent characters in all languages. For example, Western European languages use several encoding schemes (in the ISO-8859-x family). Even a single language like Chinese has a few encoding schemes (GB2312/GBK, BIG5). Many encoding schemes are in conflict with each other, i.e., the same code number is assigned to different characters.

Unicode aims to provide a standard character encoding scheme, which is universal, efficient, uniform and unambiguous. The Unicode standard is maintained by a non-profit organization called the Unicode Consortium (@ www.unicode.org). Unicode is also an ISO/IEC standard, 10646.

Unicode is backward compatible with the 7-bit US-ASCII and 8-bit Latin-1 (ISO-8859-1). That is, the first 128 characters are the same as US-ASCII, and the first 256 characters are the same as Latin-1.

Unicode originally used 16 bits (called UCS-2 or Unicode Character Set - 2 byte), which can represent up to 65,536 characters. It has since been expanded beyond 16 bits, and currently stands at 21 bits. The range of the legal codes in ISO/IEC 10646 is now from U+0000H to U+10FFFFH (21 bits, 1,114,112 code points), covering all current and ancient historical scripts. The original 16-bit range of U+0000H to U+FFFFH (65,536 characters) is known as the Basic Multilingual Plane (BMP), covering all the major languages in use currently. The characters outside the BMP are called Supplementary Characters, which are not often used.

Unicode has two encoding schemes:

- UCS-2 (Universal Character Set - 2 Byte): Uses 2 bytes (16 bits), covering 65,536 characters in the BMP. BMP is sufficient for most of the applications. UCS-2 is now obsolete.

- UCS-4 (Universal Character Set - 4 Byte): Uses 4 bytes (32 bits), covering BMP and the supplementary characters.

UTF-8 (Unicode Transformation Format - 8-bit)

The 16/32-bit Unicode (UCS-2/4) is grossly inefficient if the document contains mainly ASCII characters, because each character occupies 2 or 4 bytes of storage. Variable-length encoding schemes, such as UTF-8, which uses 1-4 bytes to represent a character, were devised to improve the efficiency. In UTF-8, the 128 commonly-used US-ASCII characters use only 1 byte, but some less-common characters may require up to 4 bytes. Overall, the efficiency improves for documents containing mainly US-ASCII text.

The transformation between Unicode and UTF-8 is as follows:

| Bits | Unicode | UTF-8 Code | Bytes |
|---|---|---|---|
| 7 | 00000000 0xxxxxxx | 0xxxxxxx | 1 (ASCII) |
| 11 | 00000yyy yyxxxxxx | 110yyyyy 10xxxxxx | 2 |
| 16 | zzzzyyyy yyxxxxxx | 1110zzzz 10yyyyyy 10xxxxxx | 3 |
| 21 | 000uuuuu zzzzyyyy yyxxxxxx | 11110uuu 10uuzzzz 10yyyyyy 10xxxxxx | 4 |

In UTF-8, Unicode numbers corresponding to the 7-bit ASCII characters are padded with a leading zero, and thus have the same value as ASCII. Hence, UTF-8 can be used with all software using ASCII. Unicode numbers of 128 and above, which are less frequently used, are encoded using more bytes (2-4 bytes). UTF-8 generally requires less storage and is compatible with ASCII. The drawback of UTF-8 is that more processing power is needed to unpack the code due to its variable length. UTF-8 is the most popular format for Unicode.

Notes:

- UTF-8 uses 1-3 bytes for the characters in BMP (16-bit), and 4 bytes for supplementary characters outside BMP (21-bit).

- The 128 ASCII characters (basic Latin letters, digits, and punctuation signs) use one byte. Most European and Middle East characters use a 2-byte sequence, which includes extended Latin letters (with tilde, macron, acute, grave and other accents), Greek, Armenian, Hebrew, Arabic, and others. Chinese, Japanese and Korean (CJK) use three-byte sequences.

- All the bytes, except those of the 128 ASCII characters, have a leading '1' bit. In other words, the ASCII bytes, with a leading '0' bit, can be identified and decoded easily.

Example: 您好 (Unicode: 60A8H 597DH)

Unicode (UCS-2) is 60A8H = 0110 0000 10 101000B ⇒ UTF-8 is 11100110 10000010 10101000B = E6 82 A8H

Unicode (UCS-2) is 597DH = 0101 1001 01 111101B ⇒ UTF-8 is 11100101 10100101 10111101B = E5 A5 BDH
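The transformation table can be turned into code almost line by line. The following sketch is a hand-written encoder for illustration only (the names Utf8Sketch, encode and hex are made up); it does not reject the surrogate range D800H-DFFFH as a real encoder would, and production code should simply call String.getBytes(StandardCharsets.UTF_8).

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

class Utf8Sketch {
    // Encode one Unicode code number into 1-4 UTF-8 bytes, following the table above.
    static byte[] encode(int cp) {
        if (cp < 0 || cp > 0x10FFFF) {
            throw new IllegalArgumentException("not a Unicode code number: " + cp);
        }
        if (cp < 0x80) {            // 7 bits: 0xxxxxxx
            return new byte[] {(byte) cp};
        }
        if (cp < 0x800) {           // 11 bits: 110yyyyy 10xxxxxx
            return new byte[] {(byte) (0xC0 | (cp >> 6)), (byte) (0x80 | (cp & 0x3F))};
        }
        if (cp < 0x10000) {         // 16 bits: 1110zzzz 10yyyyyy 10xxxxxx
            return new byte[] {(byte) (0xE0 | (cp >> 12)),
                               (byte) (0x80 | ((cp >> 6) & 0x3F)),
                               (byte) (0x80 | (cp & 0x3F))};
        }
        // 21 bits: 11110uuu 10uuzzzz 10yyyyyy 10xxxxxx
        return new byte[] {(byte) (0xF0 | (cp >> 18)),
                           (byte) (0x80 | ((cp >> 12) & 0x3F)),
                           (byte) (0x80 | ((cp >> 6) & 0x3F)),
                           (byte) (0x80 | (cp & 0x3F))};
    }

    // Bytes as space-separated two-digit hex.
    static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            if (sb.length() > 0) sb.append(' ');
            sb.append(String.format("%02X", b));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(hex(encode(0x60A8)));   // E6 82 A8
        System.out.println(hex(encode(0x597D)));   // E5 A5 BD
        // Cross-check against the JDK's own encoder
        byte[] jdk = "\u60A8".getBytes(StandardCharsets.UTF_8);
        System.out.println(Arrays.equals(encode(0x60A8), jdk));   // true
    }
}
```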

UTF-16 (Unicode Transformation Format - 16-bit)

UTF-16 is a variable-length Unicode character encoding scheme, which uses 2 or 4 bytes. UTF-16 is not commonly used. The transformation table is as follows:

| Unicode | UTF-16 Code | Bytes |
|---|---|---|
| xxxxxxxx xxxxxxxx | Same as UCS-2 - no encoding | 2 |
| 000uuuuu zzzzyyyy yyxxxxxx (uuuuu≠0) | 110110ww wwzzzzyy 110111yy yyxxxxxx (wwww = uuuuu - 1) | 4 |

Take note that for the 65,536 characters in the BMP, UTF-16 is the same as UCS-2 (2 bytes). For the supplementary characters outside the BMP, 4 bytes are used: each character requires a pair of 16-bit values, the first from the high-surrogates range (\uD800-\uDBFF), the second from the low-surrogates range (\uDC00-\uDFFF).
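The surrogate pair can be computed by hand as a check on the table. In this sketch, subtracting 10000H from the code number plays the role of the "wwww = uuuuu - 1" step; the class and method names (Utf16Sketch, toSurrogates) are hypothetical, and the JDK's Character.toChars does the same job.

```java
import java.util.Arrays;

class Utf16Sketch {
    // Split a supplementary code number (10000H..10FFFFH) into a high and a low surrogate.
    static char[] toSurrogates(int cp) {
        if (cp < 0x10000 || cp > 0x10FFFF) {
            throw new IllegalArgumentException("not a supplementary character: " + cp);
        }
        int v = cp - 0x10000;                        // 20 bits remain
        char high = (char) (0xD800 | (v >> 10));     // 110110ww wwzzzzyy
        char low = (char) (0xDC00 | (v & 0x3FF));    // 110111yy yyxxxxxx
        return new char[] {high, low};
    }

    public static void main(String[] args) {
        char[] pair = toSurrogates(0x1F600);
        System.out.printf("%04X %04X%n", (int) pair[0], (int) pair[1]);   // D83D DE00
        // Cross-check against the JDK
        System.out.println(Arrays.equals(pair, Character.toChars(0x1F600)));   // true
    }
}
```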

UTF-32 (Unicode Transformation Format - 32-bit)

Same as UCS-4, which uses 4 bytes for each character - unencoded.

Formats of Multi-Byte (e.g., Unicode) Text Files

Endianness (or byte-order): For a multi-byte character, you need to take care of the order of the bytes in storage. In big endian, the most significant byte is stored at the memory location with the lowest address (big byte first). In little endian, the most significant byte is stored at the memory location with the highest address (little byte first). For example, 您 (with Unicode number of 60A8H) is stored as 60 A8 in big endian, and as A8 60 in little endian. Big endian, which produces a more readable hex dump, is more commonly used, and is often the default.

BOM (Byte Order Mark): BOM is a special Unicode character having code number of FEFFH, which is used to differentiate big-endian and little-endian. For big-endian, BOM appears as FE FFH in the storage. For little-endian, BOM appears as FF FEH. Unicode reserves these two code numbers to prevent the BOM from clashing with another character.

Unicode text files could take on these formats:

- Big Endian: UCS-2BE, UTF-16BE, UTF-32BE.

- Little Endian: UCS-2LE, UTF-16LE, UTF-32LE.

- UTF-16 with BOM. The first character of the file is a BOM character, which specifies the endianness. For big-endian, BOM appears as FE FFH in the storage. For little-endian, BOM appears as FF FEH.

A UTF-8 file always has the same byte order, so the BOM plays no part in indicating endianness. However, in some systems (in particular Windows), a BOM is added as the first character in the UTF-8 file as a signature to identify the file as UTF-8 encoded. The BOM character (FEFFH) is encoded in UTF-8 as EF BB BF. Adding a BOM as the first character of the file is not recommended, as it may be incorrectly interpreted in other systems. You can have a UTF-8 file without a BOM.
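The byte orders and the BOM described above can be observed with the standard charsets in java.nio.charset.StandardCharsets. A minimal sketch follows; the class and helper names (ByteOrderDemo, hex) are made up for illustration.

```java
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;

class ByteOrderDemo {
    // Encode the text with the given charset and return the bytes as hex.
    static String hex(String text, Charset charset) {
        StringBuilder sb = new StringBuilder();
        for (byte b : text.getBytes(charset)) {
            if (sb.length() > 0) sb.append(' ');
            sb.append(String.format("%02X", b));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String nin = "\u60A8";   // the character 您
        System.out.println(hex(nin, StandardCharsets.UTF_16BE));   // 60 A8
        System.out.println(hex(nin, StandardCharsets.UTF_16LE));   // A8 60
        // Java's plain "UTF-16" encoder writes a big-endian BOM first
        System.out.println(hex(nin, StandardCharsets.UTF_16));     // FE FF 60 A8
        // The BOM character itself, encoded in UTF-8
        System.out.println(hex("\uFEFF", StandardCharsets.UTF_8)); // EF BB BF
    }
}
```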

Formats of Text Files

Line Delimiter or End-Of-Line (EOL): Sometimes, when you use the Windows NotePad to open a text file (created in Unix or Mac), all the lines are joined together. This is because different operating platforms use different characters as the so-called line delimiter (or end-of-line or EOL). Two non-printable control characters are involved: 0AH (Line-Feed or LF) and 0DH (Carriage-Return or CR).

- Windows/DOS uses 0D0AH (CR+LF or "\r\n") as EOL.

- Unix and Mac use 0AH (LF or "\n") only.
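Converting between the two conventions is a simple string replacement. The following sketch uses made-up names (EolNormalizer, toUnix, toWindows) and is meant for small in-memory strings, not large files.

```java
class EolNormalizer {
    // CR+LF -> LF
    static String toUnix(String text) {
        return text.replace("\r\n", "\n");
    }

    // Any mix of LF and CR+LF -> CR+LF (normalize first so existing CR+LF is not doubled)
    static String toWindows(String text) {
        return toUnix(text).replace("\n", "\r\n");
    }

    public static void main(String[] args) {
        String mixed = "line1\r\nline2\nline3\r\n";
        System.out.println(toUnix(mixed).length());      // 18: three 5-letter lines plus 3 LFs
        System.out.println(toWindows(mixed).length());   // 21: three 5-letter lines plus 3 CR+LFs
        // The delimiter of the platform the program is running on
        System.out.println(System.lineSeparator().length());
    }
}
```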

End-of-File (EOF): [TODO]

Windows' CMD Codepage

The character encoding scheme (charset) in Windows is called a codepage. In the CMD shell, you can issue the command "chcp" to display the current codepage, or "chcp codepage-number" to change the codepage.

Take note that:

- The default codepage 437 (used in the original DOS) is an 8-bit character set called Extended ASCII, which is different from Latin-1 for code numbers above 127.

- Codepage 1252 (Windows-1252) is not exactly the same as Latin-1. It assigns code numbers 80H to 9FH to letters and punctuation, such as smart single-quotes and double-quotes. A common problem in browsers, where quotes and apostrophes are displayed as question marks or boxes, arises because the page is supposed to be Windows-1252 but is mislabelled as ISO-8859-1.

- For internationalization and Chinese character sets: codepage 65001 for UTF-8, codepage 1201 for UCS-2BE, codepage 1200 for UCS-2LE, codepage 936 for Chinese characters in GB2312, codepage 950 for Chinese characters in Big5.

Chinese Character Sets

Unicode supports all languages, including Asian languages like Chinese (both simplified and traditional characters), Japanese and Korean (collectively called CJK). There are more than 20,000 CJK characters in Unicode. Unicode characters are often encoded in the UTF-8 scheme, which unfortunately requires 3 bytes for each CJK character, instead of 2 bytes in the unencoded UCS-2 (UTF-16).

Worse still, there are also various Chinese character sets, which are not compatible with Unicode:

- GB2312/GBK: for simplified Chinese characters. GB2312 uses 2 bytes for each Chinese character. The most significant bit (MSB) of both bytes is set to 1 to co-exist with 7-bit ASCII, which has an MSB of 0. There are about 6700 characters. GBK is an extension of GB2312, which includes more characters as well as traditional Chinese characters.

- BIG5: for traditional Chinese characters. BIG5 also uses 2 bytes for each Chinese character. The most significant bit of the first byte is set to 1; the second byte may fall in either the 40H-7EH or the A1H-FEH range. BIG5 is not compatible with GBK, i.e., the same code number is assigned to different characters.

For example, the world is made more interesting with these many standards:

| | Standard | Characters | Codes |
|---|---|---|---|
| Simplified | GB2312 | 和谐 | BACD D0B3 |
| | UCS-2 | 和谐 | 548C 8C10 |
| | UTF-8 | 和谐 | E5928C E8B090 |
| Traditional | BIG5 | 和諧 | A94D BFD3 |
| | UCS-2 | 和諧 | 548C 8AE7 |
| | UTF-8 | 和諧 | E5928C E8ABA7 |

Notes for Windows' CMD Users: To display Chinese characters correctly in the CMD shell, you need to choose the right codepage, e.g., 65001 for UTF-8, 936 for GB2312/GBK, 950 for Big5, 1201 for UCS-2BE, 1200 for UCS-2LE, 437 for the original DOS. You can use the command "chcp" to display the current code page and the command "chcp codepage_number" to change the codepage. You also have to choose a font that can display the characters (e.g., Courier New, Consolas or Lucida Console, not a raster font).

Collating Sequences (for Ranking Characters)

A string consists of a sequence of characters in upper or lower case, e.g., "apple", "Boy", "Cat". In sorting or comparing strings, if we order the characters according to the underlying code numbers (e.g., US-ASCII) character-by-character, the order for the example would be "Boy", "Cat", "apple", because uppercase letters have smaller code numbers than lowercase letters. This does not agree with the so-called dictionary order, where the same uppercase and lowercase letters have the same rank. Another common problem in ordering strings is that "10" (ten) is at times ordered in front of "2" to "9".

Hence, in sorting or comparison of strings, a so-called collating sequence (or collation) is often defined, which specifies the ranks for letters (uppercase, lowercase), numbers, and special symbols. There are many collating sequences available. It is entirely up to you to choose a collating sequence to meet your application's specific requirements. Some case-insensitive dictionary-order collating sequences have the same rank for the same uppercase and lowercase letters, i.e., 'A', 'a' ⇒ 'B', 'b' ⇒ ... ⇒ 'Z', 'z'. Some case-sensitive dictionary-order collating sequences put the uppercase letter before its lowercase counterpart, i.e., 'A' ⇒ 'B' ⇒ 'C' ... ⇒ 'a' ⇒ 'b' ⇒ 'c' .... Typically, space is ranked before digits '0' to '9', followed by the alphabets.

Collating sequence is often language dependent, as different languages use different sets of characters (e.g., á, é, a, α) with their own orders.
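In Java, the choice of collating sequence shows up as the Comparator passed to a sort. The sketch below compares code-number order, a case-insensitive order, and a locale-aware java.text.Collator; the class and helper names (CollationDemo, sorted) are made up, and the choice of Locale.ENGLISH is an assumption for the example.

```java
import java.text.Collator;
import java.util.Arrays;
import java.util.Comparator;
import java.util.Locale;

class CollationDemo {
    // Return a sorted copy, leaving the input array untouched.
    static String[] sorted(String[] words, Comparator<? super String> order) {
        String[] copy = words.clone();
        Arrays.sort(copy, order);
        return copy;
    }

    public static void main(String[] args) {
        String[] words = {"apple", "Boy", "Cat"};
        // Code-number order: uppercase letters come before lowercase letters
        System.out.println(Arrays.toString(sorted(words, Comparator.<String>naturalOrder())));
        // Case-insensitive order
        System.out.println(Arrays.toString(sorted(words, String.CASE_INSENSITIVE_ORDER)));
        // Locale-aware dictionary order
        System.out.println(Arrays.toString(sorted(words, Collator.getInstance(Locale.ENGLISH))));
    }
}
```

The first line should print [Boy, Cat, apple]; the other two should print [apple, Boy, Cat].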

For Java Programmers - java.nio.Charset

JDK 1.4 introduced a new java.nio.charset package to support encoding/decoding of characters from UCS-2 used internally in Java programs to any supported charset used by external devices.

Example: The following program encodes some Unicode text in various encoding schemes, and displays the hex codes of the encoded byte sequences.

    import java.nio.ByteBuffer;
    import java.nio.charset.Charset;

    public class TestCharsetEncodeDecode {
        public static void main(String[] args) {
            // Charsets to try
            String[] charsetNames = {"US-ASCII", "ISO-8859-1", "UTF-8", "UTF-16",
                                     "UTF-16BE", "UTF-16LE", "GBK", "BIG5"};
            String message = "Hi,您好!";  // Unicode text to be encoded

            // Print the UCS-2 code numbers held in the String
            System.out.printf("%10s: ", "UCS-2");
            for (int i = 0; i < message.length(); i++) {
                System.out.printf("%04X ", (int) message.charAt(i));
            }
            System.out.println();

            // Encode the text with each charset and print the bytes in hex
            for (String charsetName : charsetNames) {
                Charset charset = Charset.forName(charsetName);
                System.out.printf("%10s: ", charset.name());
                ByteBuffer bb = charset.encode(message);
                while (bb.hasRemaining()) {
                    System.out.printf("%02X ", bb.get());
                }
                System.out.println();
            }
        }
    }

The output is:

         UCS-2: 0048 0069 002C 60A8 597D 0021
      US-ASCII: 48 69 2C 3F 3F 21
    ISO-8859-1: 48 69 2C 3F 3F 21
         UTF-8: 48 69 2C E6 82 A8 E5 A5 BD 21
        UTF-16: FE FF 00 48 00 69 00 2C 60 A8 59 7D 00 21
      UTF-16BE: 00 48 00 69 00 2C 60 A8 59 7D 00 21
      UTF-16LE: 48 00 69 00 2C 00 A8 60 7D 59 21 00
           GBK: 48 69 2C C4 FA BA C3 21
          Big5: 48 69 2C B1 7A A6 6E 21

For Java Programmers - char and String

The char data type is based on the original 16-bit Unicode standard called UCS-2. Unicode has since evolved to 21 bits, with a code range of U+0000 to U+10FFFF. The set of characters from U+0000 to U+FFFF is known as the Basic Multilingual Plane (BMP). Characters above U+FFFF are called supplementary characters. A 16-bit Java char cannot hold a supplementary character.

Recall that in the UTF-16 encoding scheme, a BMP character uses 2 bytes. It is the same as UCS-2. A supplementary character uses 4 bytes, and requires a pair of 16-bit values, the first from the high-surrogates range (\uD800-\uDBFF), the second from the low-surrogates range (\uDC00-\uDFFF).

In Java, a String is a sequence of Unicode characters. Java, in fact, uses UTF-16 for String and StringBuffer. For BMP characters, they are the same as UCS-2. For supplementary characters, each character requires a pair of char values.

Java methods that accept a 16-bit char value do not support supplementary characters. Methods that accept a 32-bit int value support all Unicode characters (in the lower 21 bits), including supplementary characters.
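The difference between the char-based and int-based methods can be seen with a single supplementary character. This sketch uses U+20000 (a CJK ideograph outside the BMP) as the example; the class and helper names (SupplementaryDemo, fromCodePoint) are hypothetical.

```java
class SupplementaryDemo {
    // Build a String holding exactly one Unicode character, BMP or supplementary.
    static String fromCodePoint(int cp) {
        return new String(Character.toChars(cp));
    }

    public static void main(String[] args) {
        String s = fromCodePoint(0x20000);
        System.out.println(s.length());                          // 2 char values (a surrogate pair)
        System.out.println(s.codePointCount(0, s.length()));     // 1 Unicode character
        System.out.printf("%04X %04X%n", (int) s.charAt(0), (int) s.charAt(1));   // D840 DC00
        // The char overload sees only half of the pair; the int overload sees the whole character
        System.out.println(Character.isLetter(s.charAt(0)));     // false
        System.out.println(Character.isLetter(s.codePointAt(0))); // true
    }
}
```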

This is meant to be an academic discussion. I have yet to encounter the use of supplementary characters!

Displaying Hex Values & Hex Editors

At times, you may need to display the hex values of a file, especially when dealing with Unicode characters. A Hex Editor is a handy tool that a good programmer should possess in his/her toolbox. There are many freeware/shareware Hex Editors available. Try googling "Hex Editor".

I used the following:

- NotePad++ with Hex Editor Plug-in: Open-source and free. You can toggle between Hex view and Normal view by pushing the "H" button.

- PSPad: Freeware. You can toggle to Hex view by choosing the "View" menu and selecting "Hex Edit Mode".

- TextPad: Shareware without expiration period. To view the Hex value, you need to "open" the file by choosing the file format of "binary" (??).

- UltraEdit: Shareware, not free, 30-day trial only.

Let me know if you have a better choice, which is fast to launch, easy to use, can toggle between Hex and normal view, free, ....

The following Java program can be used to display hex code for Java primitives (integer, character and floating-point):

    public class PrintHexCode {
        public static void main(String[] args) {
            // Integer in various radixes
            int i = 12345;
            System.out.println("Decimal is " + i);
            System.out.println("Hex is " + Integer.toHexString(i));
            System.out.println("Binary is " + Integer.toBinaryString(i));
            System.out.println("Octal is " + Integer.toOctalString(i));
            System.out.printf("Hex is %x\n", i);
            System.out.printf("Octal is %o\n", i);

            // Character and its code number
            char c = 'a';
            System.out.println("Character is " + c);
            System.out.printf("Character is %c\n", c);
            System.out.printf("Hex is %x\n", (short)c);
            System.out.printf("Decimal is %d\n", (short)c);

            // Floating-point numbers in hexadecimal floating-point notation
            float f = 3.5f;
            System.out.println("Decimal is " + f);
            System.out.println(Float.toHexString(f));

            f = -0.75f;
            System.out.println("Decimal is " + f);
            System.out.println(Float.toHexString(f));

            double d = 11.22;
            System.out.println("Decimal is " + d);
            System.out.println(Double.toHexString(d));
        }
    }

In Eclipse, you can view the hex code for integer primitive Java variables in debug mode as follows: In debug perspective, "Variable" panel ⇒ Select the "menu" (inverted triangle) ⇒ Java ⇒ Java Preferences... ⇒ Primitive Display Options ⇒ Check "Display hexadecimal values (byte, short, char, int, long)".

Summary - Why Bother about Data Representation?

Integer number 1, floating-point number 1.0, character symbol '1', and string "1" are totally different inside the computer memory. You need to know the difference to write good and high-performance programs.

- In 8-bit signed integer, integer number 1 is represented as 00000001B.

- In 8-bit unsigned integer, integer number 1 is represented as 00000001B.

- In 16-bit signed integer, integer number 1 is represented as 00000000 00000001B.

- In 32-bit signed integer, integer number 1 is represented as 00000000 00000000 00000000 00000001B.

- In 32-bit floating-point representation, number 1.0 is represented as 0 01111111 0000000 00000000 00000000B, i.e., S=0, E=127, F=0.

- In 64-bit floating-point representation, number 1.0 is represented as 0 01111111111 0000 00000000 00000000 00000000 00000000 00000000 00000000B, i.e., S=0, E=1023, F=0.

- In 8-bit Latin-1, the character symbol '1' is represented as 00110001B (or 31H).

- In 16-bit UCS-2, the character symbol '1' is represented as 00000000 00110001B.

- In UTF-8, the character symbol '1' is represented as 00110001B.

If you "add" a 16-bit signed integer 1 and Latin-1 character '1' or a string "1", you could get a surprise.
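The surprise looks like this in Java. In the sketch below (class and method names are made up for illustration), the same "+" gives three different results depending on how the operands are represented.

```java
class MixedAddition {
    // The char is promoted to its code number: 1 + 31H (49) = 50
    static int addAsCodes(short n, char c) {
        return n + c;
    }

    // With a String operand, "+" means concatenation: "1" followed by "1"
    static String addAsText(short n, String s) {
        return n + s;
    }

    // Convert the representations to numbers first to get arithmetic addition
    static int addAsNumbers(short n, char c, String s) {
        return n + Character.digit(c, 10) + Integer.parseInt(s);
    }

    public static void main(String[] args) {
        short one = 1;
        System.out.println(addAsCodes(one, '1'));          // 50
        System.out.println(addAsText(one, "1"));           // 11
        System.out.println(addAsNumbers(one, '1', "1"));   // 3
    }
}
```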

Exercises (Data Representation)

For the following 16-bit codes:

0000 0000 0010 1010; 1000 0000 0010 1010;

Give their values, if they are representing:

1. a 16-bit unsigned integer;

2. a 16-bit signed integer;

3. two 8-bit unsigned integers;

4. two 8-bit signed integers;

5. a 16-bit Unicode character;

6. two 8-bit ISO-8859-1 characters.

Ans: (1) 42, 32810; (2) 42, -32726; (3) 0, 42; 128, 42; (4) 0, 42; -128, 42; (5) '*'; '耪'; (6) NUL, '*'; PAD, '*'.
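The numeric answers can be checked with Java casts and masks, since short and byte are signed 16-bit and 8-bit types. This is a sketch with hypothetical names (SixteenBitViews and its helpers); the second pattern, 1000 0000 0010 1010B, is written as 802AH.

```java
class SixteenBitViews {
    static int unsigned16(int bits)       { return bits & 0xFFFF; }          // 16-bit unsigned
    static int signed16(int bits)         { return (short) bits; }           // 16-bit signed
    static int unsignedHighByte(int bits) { return (bits >> 8) & 0xFF; }     // upper 8 bits, unsigned
    static int signedHighByte(int bits)   { return (byte) (bits >> 8); }     // upper 8 bits, signed
    static int lowByte(int bits)          { return bits & 0xFF; }            // lower 8 bits

    public static void main(String[] args) {
        int[] patterns = {0x002A, 0x802A};
        for (int p : patterns) {
            System.out.printf("%04X: unsigned=%d signed=%d bytes=(%d or %d, %d) char code=U+%04X%n",
                    p, unsigned16(p), signed16(p),
                    unsignedHighByte(p), signedHighByte(p), lowByte(p), p);
        }
    }
}
```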

REFERENCES & RESOURCES

- (Floating-Point Number Specification) IEEE 754 (1985), "IEEE Standard for Binary Floating-Point Arithmetic".

- (ASCII Specification) ISO/IEC 646 (1991) (or ITU-T T.50-1992), "Information technology - 7-bit coded character set for information interchange".

- (Latin-1 Specification) ISO/IEC 8859-1, "Information technology - 8-bit single-byte coded graphic character sets - Part 1: Latin alphabet No. 1".

- (Unicode Specification) ISO/IEC 10646, "Information technology - Universal Multiple-Octet Coded Character Set (UCS)".

- Unicode Consortium @ http://www.unicode.org.

Source: https://www3.ntu.edu.sg/home/ehchua/programming/java/datarepresentation.html
